Enterprise Selection Patterns Reveal Critical Product Management Insights

The artificial intelligence coding assistant market has evolved from a novel experiment into a strategic imperative for engineering organizations. Recent data reveals a fascinating paradox: the fastest tool isn’t winning the enterprise, and market leadership depends more on organizational size than raw technical capabilities. For product managers navigating build-versus-buy decisions and tool selection, understanding these dynamics is essential.

The competitive landscape has crystallized around three major players: GitHub Copilot, Claude Code, and Cursor. Each represents a distinct approach to AI-assisted development, and their adoption patterns reveal critical insights about how enterprises make technology decisions in the AI era.

The Enterprise Divide: Size Determines Strategy

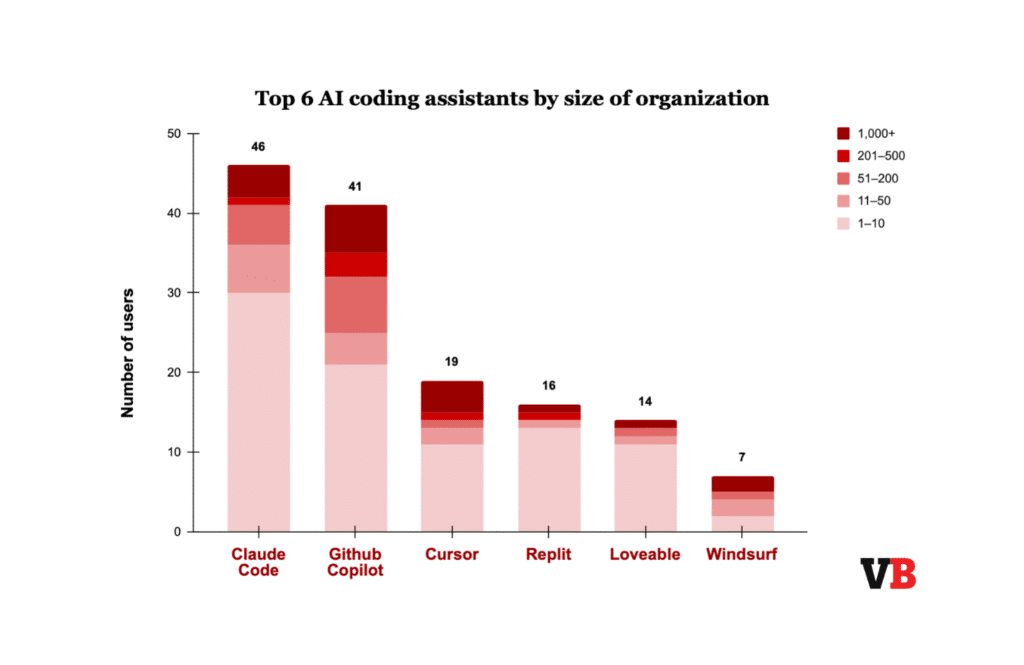

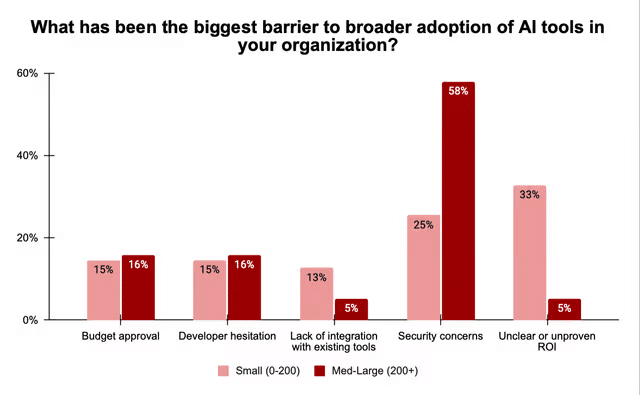

One of the most striking findings in the current AI coding assistant landscape is how dramatically company size influences platform selection. Larger enterprises with 200 or more employees demonstrate a strong preference for GitHub Copilot, while smaller teams increasingly gravitate toward newer platforms like Claude Code, Cursor, and Replit.

This segmentation isn’t arbitrary—it reflects fundamental differences in organizational priorities. For product managers, this size-based split illuminates a crucial insight: enterprise governance requirements drive platform selection more than raw capabilities. The implication is profound: when evaluating tools for your organization, technical performance metrics tell only part of the story.

Large enterprises operate under constraints that smaller companies simply don’t face. Compliance frameworks, security audits, procurement processes, and integration requirements create a decision-making environment where stability and established vendor relationships often outweigh cutting-edge features. GitHub Copilot, backed by Microsoft’s enterprise infrastructure, naturally aligns with these needs.

Conversely, smaller organizations can move faster and take calculated risks on newer technologies. They’re optimizing for different variables: rapid iteration, developer experience, and competitive advantage through early adoption. This flexibility allows them to experiment with Claude Code and Cursor without the bureaucratic overhead that would delay or prevent such decisions in larger organizations.

Security Concerns: The Primary Enterprise Blocker

Security concerns dominate the adoption barriers for medium-to-large teams: 58% of organizations with 200 or more employees cite security as their biggest obstacle to AI coding assistant adoption. Product managers in the enterprise space should plan around this figure.

The security concern isn’t merely about data protection—it encompasses code ownership, intellectual property rights, compliance with industry regulations, and liability for AI-generated code. Enterprise product managers must anticipate these concerns when introducing any AI tool into their technology stack. The questions aren’t technical; they’re organizational and legal.

For smaller organizations, the barrier landscape looks remarkably different. Thirty-three percent identify “unclear or unproven ROI” as their primary obstacle. This reveals a resource allocation challenge: smaller companies must justify every tool investment more rigorously because each decision represents a larger percentage of their budget and technical capacity.

Product managers in smaller organizations face a different challenge: proving value quickly and demonstrably. Unlike enterprises that can absorb experimental tools across large engineering teams, smaller companies need immediate productivity gains to justify the investment.

Claude Dominates Code Generation Market Share

Perhaps the most significant finding for product strategists is Claude’s market position. Anthropic’s Claude holds 42% of the code generation market, double OpenAI’s 21% share, according to a Menlo Ventures survey of 150 enterprise technical leaders.

This dominance challenges conventional wisdom about first-mover advantage in AI markets. GitHub Copilot, launched earlier and backed by Microsoft’s massive distribution power, doesn’t lead in market share for code generation capabilities. Instead, Claude’s superior code quality and reasoning abilities have carved out a dominant position.

For product managers, this demonstrates that in AI markets, technical excellence can overcome distribution advantages. The implication for product strategy is clear: in rapidly evolving technology categories, the best product can still win even against entrenched competitors with superior distribution.

Claude’s market leadership has translated into remarkable financial performance for Anthropic. The company has tripled the number of eight- and nine-figure enterprise deals signed in 2025 compared to all of 2024, reflecting broader enterprise adoption beyond its coding stronghold. This growth trajectory validates the strategic importance of AI coding assistants in enterprise technology stacks.

Speed Doesn’t Equal Success: The GitHub-Claude Performance Paradox

The title insight (“Cursor’s speed can’t close”) reveals a critical lesson for product managers: raw performance metrics don’t determine market outcomes. GitHub Copilot achieved the fastest time-to-first-code at just 17 seconds during security vulnerability detection tasks. Claude Code, by comparison, required 36 seconds, more than twice as long.

Yet despite this speed disadvantage, Claude Code maintains significant enterprise traction. The reason? Enterprise advantages that matter more than a 19-second head start. These advantages likely include superior code quality, better reasoning about complex architectural decisions, more accurate security recommendations, and fewer false positives in code suggestions.

This paradox should inform every product roadmap discussion. Speed is important, but it’s rarely the only—or even primary—factor in enterprise adoption. Product managers often obsess over performance benchmarks, but customers evaluate solutions holistically. A slower tool that produces more reliable, maintainable, and secure code creates more value than a faster tool that requires extensive review and revision.

Cursor, despite its performance advantages, faces challenges closing the gap with established players. This suggests that beyond initial performance metrics, factors like ecosystem integration, enterprise support, and platform maturity heavily influence adoption decisions.

The ROI Challenge for Product Managers

The finding that 33% of smaller organizations cite unclear ROI as their primary barrier deserves deeper examination. For product managers, this reveals a critical go-to-market challenge: the value proposition for AI coding assistants remains difficult to quantify.

Unlike traditional software tools with clear productivity metrics—lines of code written, bugs fixed, features shipped—AI coding assistants impact development in nuanced ways. They may not dramatically increase output but could significantly improve code quality, reduce cognitive load, accelerate onboarding, or enable junior developers to tackle more complex tasks.

Product managers introducing these tools must develop new frameworks for measuring value. Traditional metrics like developer productivity are notoriously difficult to measure and can be gamed. More sophisticated approaches might track:

- Time saved on routine coding tasks, allowing developers to focus on architectural decisions

- Reduction in security vulnerabilities introduced during development

- Faster onboarding time for new team members

- Increased consistency in code style and patterns across the codebase

- Developer satisfaction and reduced burnout from repetitive tasks

The ROI uncertainty also creates an opportunity. Organizations that develop robust measurement frameworks for AI coding assistant value can make better-informed decisions and potentially gain competitive advantages through more sophisticated tool adoption strategies.
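One way to make a measurement framework concrete is to compare a baseline period against a pilot period for several of the metrics listed above. The sketch below is a minimal illustration under stated assumptions: the `PilotSnapshot` fields, the 1-5 satisfaction scale, and every sample number are hypothetical and do not come from the surveys cited in this article.

```python
from dataclasses import dataclass, fields


@dataclass
class PilotSnapshot:
    """Hypothetical measurements for one team over one period (illustrative fields)."""
    routine_task_hours: float   # hours spent on routine coding tasks
    vulnerabilities: float      # security findings introduced during development
    onboarding_days: float      # days until a new hire ships a first change
    satisfaction: float         # developer survey score on an assumed 1-5 scale


def relative_change(before: float, after: float) -> float:
    """Return the fractional change from before to after (negative = reduction)."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before


def compare(baseline: PilotSnapshot, pilot: PilotSnapshot) -> dict[str, float]:
    """Compute the relative change for every metric in the snapshot."""
    return {
        f.name: relative_change(getattr(baseline, f.name), getattr(pilot, f.name))
        for f in fields(PilotSnapshot)
    }


# Made-up sample numbers, for illustration only.
baseline = PilotSnapshot(
    routine_task_hours=100.0, vulnerabilities=20, onboarding_days=30.0, satisfaction=3.0
)
pilot = PilotSnapshot(
    routine_task_hours=80.0, vulnerabilities=15, onboarding_days=24.0, satisfaction=3.6
)

for metric, change in compare(baseline, pilot).items():
    print(f"{metric}: {change:+.0%}")
```

Reporting each metric separately, rather than collapsing them into one productivity number, keeps quality and satisfaction effects visible even when raw output barely moves.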

Strategic Implications for Product Development

These market dynamics reveal several strategic implications for product managers across the technology landscape:

Platform Selection Requires Organizational Self-Awareness: Before evaluating specific tools, product managers must honestly assess their organization’s constraints. Are security and compliance requirements paramount? Does your organization prioritize speed to market over established vendor relationships? Understanding these factors shapes which solutions merit serious consideration.

Integration Matters More Than Features: The success of GitHub Copilot in enterprises isn’t primarily about its technical capabilities—it’s about seamless integration with existing developer workflows, IDE support, and alignment with enterprise IT infrastructure. When evaluating tools, assess integration complexity as rigorously as feature sets.

Developer Experience Drives Adoption: Regardless of executive enthusiasm, AI coding assistants succeed or fail based on developer adoption. The best tools become part of developers’ natural workflows. Product managers should involve engineering teams early in evaluation processes and prioritize tools that developers actually want to use.

Market Leadership Is Fluid: Claude’s rapid rise to market dominance demonstrates that AI markets remain dynamic. For product managers, this means continuous evaluation of the landscape. The leader today may not lead tomorrow, and being locked into a specific platform creates strategic risk.

Quality Trumps Speed in Enterprise Contexts: The GitHub-Claude performance comparison reveals that enterprises value reliability, accuracy, and reduced risk over raw speed. This insight extends beyond coding assistants to nearly any enterprise product decision. Fast is good; correct is better.

The Broader Implications for AI Tooling Strategy

The AI coding assistant market serves as a microcosm for broader trends in enterprise AI adoption. The patterns observed here—size-based segmentation, security-driven decision-making, quality over speed, and market leadership fluidity—likely apply across many AI tool categories.

Product managers should anticipate similar dynamics when evaluating AI tools for data analysis, content creation, customer support, or any other function. The lessons learned from coding assistant adoption can inform strategy across the organization’s AI portfolio.

Additionally, these findings suggest that organizations should develop AI tool evaluation frameworks that extend beyond traditional software assessment criteria. Security, privacy, IP protection, integration complexity, and change management deserve equal weight with performance benchmarks and feature checklists.
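An evaluation framework of this kind can be sketched as a weighted scorecard. The example below is only an illustration: the criterion names mirror the list above, while the 1-5 rating scale, the two unnamed candidates, and all ratings and weights are hypothetical assumptions, not measurements of any real tool.

```python
# Criteria taken from the paragraph above; ratings use an assumed 1-5 scale
# where higher is always better (so rate integration by how easy it is).
CRITERIA = (
    "security",
    "privacy",
    "ip_protection",
    "integration_complexity",
    "change_management",
    "performance",
    "features",
)


def weighted_score(ratings, weights=None):
    """Return the weighted average rating; equal weights when none are given."""
    if weights is None:
        weights = {name: 1.0 for name in CRITERIA}
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(ratings[name] * w for name, w in weights.items()) / total_weight


# Hypothetical candidates with made-up ratings.
candidate_a = dict(zip(CRITERIA, (5, 4, 4, 3, 3, 3, 3)))  # stronger on governance
candidate_b = dict(zip(CRITERIA, (3, 3, 3, 4, 4, 5, 5)))  # stronger on performance

# A hypothetical weighting for a security-sensitive organization.
security_heavy = {name: 1.0 for name in CRITERIA}
security_heavy["security"] = 3.0

for label, ratings in (("candidate_a", candidate_a), ("candidate_b", candidate_b)):
    print(label, round(weighted_score(ratings), 2), round(weighted_score(ratings, security_heavy), 2))
```

With these made-up ratings, candidate_b scores higher under equal weights and candidate_a scores higher once security is weighted three times as heavily, which is the size-based pattern described earlier expressed as arithmetic.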

Building Internal Capabilities

The data on adoption barriers reveals another strategic consideration: organizations need internal expertise to evaluate, deploy, and measure AI tool effectiveness. The ROI uncertainty plaguing smaller organizations often stems from lack of frameworks for assessment rather than lack of tool value.

Product managers should consider investing in:

- Internal AI literacy programs to help teams understand capabilities and limitations

- Frameworks for measuring AI tool impact beyond simple productivity metrics

- Pilot programs that allow controlled evaluation before full deployment

- Cross-functional working groups that include legal, security, and engineering perspectives

These capabilities become strategic assets, enabling faster and more confident AI tool adoption decisions across the organization.

The Competitive Landscape Evolution

The AI coding assistant market continues evolving rapidly. GitHub has steadily iterated on Copilot, adding support for multiple language models including Claude, Google’s Gemini, and OpenAI’s GPT-4o. This multi-model approach suggests a future where platforms matter more than individual models—users select tools based on integration and experience rather than which specific AI powers them.

Microsoft’s emphasis on agentic AI and GitHub’s introduction of agent modes indicate the next evolution: AI coding assistants that don’t just suggest code but understand broader project context, make architectural decisions, and handle multi-step development tasks autonomously.

For product managers, this evolution demands attention. The tools emerging today will fundamentally change how software gets built, which impacts roadmap planning, team structure, skill requirements, and competitive positioning.

Practical Recommendations

Based on these findings, product managers should:

- Assess Your Organization’s Profile: Determine whether your company’s size, risk tolerance, and governance requirements align more with enterprise-focused solutions like GitHub Copilot or emerging platforms like Claude Code and Cursor.

- Prioritize Security and Compliance Early: For medium-to-large organizations, address security concerns before evaluating features. Engage legal and security teams early to understand constraints and requirements.

- Develop ROI Frameworks: Create measurement approaches that capture AI coding assistant value beyond simple productivity metrics. Focus on quality, developer satisfaction, and strategic capabilities enabled by the tools.

- Plan for Multi-Model Futures: Rather than betting entirely on one platform or model, consider strategies that maintain flexibility. GitHub’s multi-model approach may represent the future—platforms that allow switching models based on task requirements.

- Invest in Change Management: Tool adoption fails without proper training, clear value communication, and cultural support. Budget time and resources for helping teams effectively integrate these tools into workflows.

- Monitor Market Evolution: The AI coding assistant landscape remains dynamic. Establish processes for regularly reassessing tools and staying informed about new capabilities and competitors.

- Start Small, Scale Deliberately: Begin with pilot programs in lower-risk contexts. Gather data, refine processes, and build confidence before organization-wide deployment.

Conclusion

The AI coding assistant market reveals fundamental truths about enterprise technology adoption in the AI era. Speed matters less than reliability. Distribution advantages can be overcome by technical excellence. Organizational size creates distinct needs and constraints. Security concerns dominate enterprise decisions while ROI uncertainty challenges smaller organizations.

For product managers, these insights extend far beyond selecting a coding assistant. They illuminate how organizations make technology decisions, what factors drive adoption, and how AI tools succeed or fail in real-world contexts. Understanding these dynamics positions product leaders to make better-informed decisions across their AI tool portfolio.

The competition between GitHub, Claude, and Cursor isn’t just about which tool writes code faster or better. It’s about understanding organizational needs, aligning tool capabilities with real-world constraints, and recognizing that the best product wins only when it fits the full context of enterprise decision-making.

As AI continues transforming software development and countless other domains, the lessons from this market will only grow more relevant. Product managers who internalize these insights will be better equipped to navigate the complex, rapidly evolving landscape of enterprise AI adoption.

Methodology Notes

The insights in this analysis draw from several key research sources:

Menlo Ventures Survey: The market share data for AI code generation comes from a comprehensive survey conducted by Menlo Ventures involving 150 enterprise technical leaders. This survey methodology provides direct insights from decision-makers actively evaluating and deploying AI coding assistants in production environments.

Organizational Size Segmentation: The research categorizes organizations into distinct size bands, with 200+ employees serving as the threshold for medium-to-large enterprises. This segmentation enables analysis of how adoption patterns, barriers, and priorities vary across different organizational scales.

Performance Benchmarking: Time-to-first-code metrics were measured during specific coding tasks, including security vulnerability detection scenarios. These benchmarks provide quantitative comparisons of response times across platforms, though the testing methodology (such as task complexity, codebase size, and testing environment specifications) was not fully detailed in available sources.

Adoption Barrier Analysis: The security concerns and ROI uncertainty statistics derive from surveys asking organizations to identify their primary obstacles to AI coding assistant adoption. The methodology appears to allow respondents to select their top concern from a predetermined list of common barriers.

Financial and Growth Metrics: Data on eight- and nine-figure enterprise deals and revenue growth comes from Anthropic’s corporate disclosures and analysis of publicly available financial information, supplemented by reporting from sources close to the company.

It’s important to note that the AI coding assistant market remains highly dynamic, with new tools launching, existing platforms adding features, and adoption patterns evolving rapidly. The data reflects snapshots from specific time periods in 2024-2025 and should be considered within that context. Organizations evaluating these tools should conduct their own assessments based on current capabilities and their specific requirements.

FAQ

Why do larger enterprises prefer GitHub Copilot?

Larger enterprises (200+ employees) select GitHub Copilot because it aligns with Microsoft’s enterprise infrastructure. Compliance, procurement, and integration requirements matter more than speed or novelty.

Why is Claude leading the code generation market?

Claude holds a 42% share in the Menlo Ventures survey. The likely reasons are superior code quality, stronger reasoning, and fewer false positives, a technical edge that outweighs GitHub Copilot’s first-mover and distribution advantages.

Why doesn’t Cursor’s speed guarantee adoption?

Cursor’s performance advantages have not closed the gap with established players, because enterprises prioritize maintainability, security, and ecosystem maturity. Reliability and integration weigh more heavily than seconds saved.

What are the biggest adoption barriers for organizations?

Enterprises cite security as the #1 blocker, covering IP, compliance, and liability. Smaller firms struggle with unclear ROI and must justify AI tools with immediate gains.

What should product leaders focus on when evaluating AI coding assistants?

Product leaders should assess organizational size and risk profile, address security and compliance early, create ROI frameworks, and plan for multi-model futures with change management in mind.